Data ingestion pipeline

Ingestion pipeline

ZADIG XDR offers an efficient data ingestion pipeline that automates the data collection process, ensuring that data from various sources are integrated, processed, and consistently stored according to defined requirements.

Our XDR solution is highly flexible and can integrate data from many types of sources (such as logs from other environments or manufacturers). The component that manages the ingestion pipeline collects data through input plugins such as syslog or TCP. Data collection from a new source can be configured at any time, even after the XDR platform has been deployed, to accommodate changes to the monitored organization's infrastructure.

The main log ingestion methodologies supported are:

- SYSLOG Collector

- CSV Collector

- DB Collector

- FTP Collector

- NetFlow Collector

- Windows Event Collector

- Kafka Collector

- HTTP

- Log collection using Filebeat

The solution natively integrates three collection methods: Kafka, used for example to ingest monitored data from the integrated IDPS module (when applicable); SYSLOG, used to integrate logs from other devices or equipment in the infrastructure; and file reading, used to integrate logs from components that write a textual alert feed to files. All other collection methods can be activated as needed.
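As an illustration of the Kafka and file-reading methods, the following is a minimal sketch of the corresponding Logstash input plugins. The broker address, topic name, consumer group, file path, and plugin IDs are hypothetical placeholders and are not taken from an actual ZADIG XDR deployment.

```
input {
  # Kafka collection, e.g. for alerts published by an IDPS module.
  kafka {
    id => "idps-kafka"                                  # hypothetical plugin ID
    bootstrap_servers => "kafka.example.internal:9092"  # hypothetical broker
    topics => ["idps-alerts"]                           # hypothetical topic
    group_id => "xdr-ingestion"                         # hypothetical consumer group
    codec => "json"                                     # assumes JSON-encoded messages
  }

  # File-based collection for components that write a textual alert feed.
  file {
    id => "component-alert-feed"                        # hypothetical plugin ID
    path => ["/var/log/example-component/alerts.log"]   # hypothetical path
    start_position => "beginning"                       # read existing content on first run
  }
}
```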

Below is an example of a Logstash configuration file that enables the integration of logs from a network firewall running pfSense:

```
input {
  tcp {
    id => "pfSense-suricata"
    port => 5544
    type => "suricata"
    codec => line
    mode => "server"
    ssl_enable => true
    ssl_certificate_authorities => ["/usr/share/logstash/config/bundle-ca-pfSense.crt"]
    ssl_cert => "/usr/share/logstash/config/logger.smart.zadig.cloud_cert.pem.cer"
    ssl_key => "/usr/share/logstash/config/key-server.key"
  }
  syslog {
    port => 5514
    type => "firewall-1"
    id => "pfSense-AzSentinel-1"
  }
}
```

In addition, our XDR solution provides native support for integration with Azure AD. To efficiently collect logs from Azure Active Directory (AD), it is necessary to configure Azure Monitor to export logs to Event Hub. The system features a pre-optimized pipeline for data collection from Event Hub.
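The Event Hub collection step can be sketched with the Logstash `azure_event_hubs` input plugin. This is only an assumed configuration, not the pre-optimized pipeline itself. The connection strings are read from hypothetical environment variables (`EVENT_HUB_CONNECTION`, `STORAGE_CONNECTION`), and the consumer group name is a placeholder.

```
input {
  azure_event_hubs {
    id => "azure-ad-eventhub"                               # hypothetical plugin ID
    config_mode => "basic"
    # Connection string of the Event Hub that Azure Monitor exports to
    # (must include EntityPath); supplied via a hypothetical environment variable.
    event_hub_connections => ["${EVENT_HUB_CONNECTION}"]
    # Storage account used to persist offsets across restarts (assumed to exist).
    storage_connection => "${STORAGE_CONNECTION}"
    consumer_group => "logstash"                            # hypothetical dedicated consumer group
    decorate_events => true                                 # add Event Hub metadata to each event
    threads => 4
  }
}
```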

To integrate logs from Microsoft AD, a Windows service must be activated on the on-premises domain controller. This service collects the logs and sends them to the ingestion component.
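The text does not name the Windows service. Purely as an assumption, if it is a Beats-compatible shipper (for example Winlogbeat), the receiving side of the ingestion component could be sketched with the Logstash `beats` input. The port, certificate paths, type, and plugin ID are hypothetical.

```
input {
  beats {
    id => "windows-ad-dc"        # hypothetical plugin ID
    port => 5044                 # conventional Beats port, assumed here
    type => "windows-ad"         # hypothetical type used for later routing
    # TLS settings; newer plugin versions rename `ssl` to `ssl_enabled`.
    ssl => true
    ssl_certificate => "/usr/share/logstash/config/example-server.crt"  # hypothetical path
    ssl_key => "/usr/share/logstash/config/example-server.pkcs8.key"    # hypothetical path (PKCS#8 key)
  }
}
```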

More generally, if another identity solution does not support direct log integration with our XDR solution but does send its data to a Security Information and Event Management (SIEM) system, those logs can be collected by configuring the SIEM as an additional direct data source for the XDR platform.

Data Repository

The XDR solution is designed to interact with data repositories located on-premises or in the cloud. The integrated cloud-native repositories are AWS OpenSearch and Microsoft Azure Log Analytics; on-premises, the integrated repository is Elasticsearch. These repositories support a distributed search engine with an HTTP web interface and documents in JSON format. The data pipeline management component uses a dedicated output plugin to store in the chosen repository what each source sends. Besides authentication settings, the only information the plugin requires to save data correctly is the address or DNS name of the repository and the index or table that will contain the data.

Below is an excerpt of the configuration file to save data to an OpenSearch cluster on AWS:

```
opensearch {
  hosts => ["https://vpc-aws8672558-oss-ec1-zadig-01-k2qdi2aw6wtmijc5phkvpblzoe.eu-central-1.es.amazonaws.com:443"]
  auth_type => {
    type => 'aws_iam'
    region => 'eu-central-1'
  }
  index => "logstash-%{+YYYY.MM}"
  action => "create"
  ssl => true
  ecs_compatibility => disabled
  #ilm_enabled => false
}
```

Below is an excerpt of the configuration file to save data to Log Analytics:

```
microsoft-sentinel-logstash-output-plugin {
  client_app_Id => "cd4d90a2-287c-497d-81d9-fb334ee836d4"
  client_app_secret => "t6r8Q~EDcw6NidiKB.vaWpxcNQZtmMN4H2dMcdlm"
  tenant_id => "8b344519-45d1-44ff-a276-5a67ae3890ce"
  data_collection_endpoint => "https://logs-ingestion-1ogm.westeurope-1.ingest.monitor.azure.com"
  dcr_immutable_id => "dcr-0a9941bcd9244ba3a4a15a6bf491b01a"
  dcr_stream_name => "Custom-gatewaylogs_CL"
  #create_sample_file => true
  #sample_file_path => "/tmp"
}
```
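For the on-premises Elasticsearch case, a comparable output block might look like the following minimal sketch. The host name, user name, and `ES_PASSWORD` environment variable are hypothetical placeholders, not values from an actual deployment.

```
output {
  elasticsearch {
    # Hypothetical on-premises node; replace with the real address or DNS name.
    hosts => ["https://elasticsearch.example.internal:9200"]
    # Monthly index, mirroring the naming used in the OpenSearch excerpt.
    index => "logstash-%{+YYYY.MM}"
    # Assumed credentials; the password is read from the environment.
    user => "logstash_writer"
    password => "${ES_PASSWORD}"
  }
}
```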

When the repository is in the cloud, data sources can be integrated through a log collection adapter that is also deployed in the cloud. The adapter supports the following source types: Syslog (CEF, LEEF, CISCO, CORELIGHT, or RAW format, over UDP, TCP, or Secure TCP with a configurable minimum TLS version of 1.2); CSV; databases (MySQL, PostgreSQL, MSSQL, or Oracle); cloud platforms (AWS, Azure, Google); files and folders; FTP; NetFlow; and Windows Events.

On-premises, log aggregators are deployed to collect data from on-site sources, apply fully customizable parsing logic, and forward the results to the cloud. This happens in real time, and no information is retained locally, even momentarily.
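As a rough illustration of customizable parsing logic, the sketch below shows a Logstash `filter` block applied to events of type `firewall-1`. The log layout (timestamp, source IP, destination IP, action), the extracted field names, and the `site` value are hypothetical; real firewall logs will need their own patterns.

```
filter {
  if [type] == "firewall-1" {
    # Extract fields from a hypothetical line such as:
    # 2024-01-15T10:22:31Z 10.0.0.5 192.0.2.10 block
    grok {
      match => { "message" => "%{TIMESTAMP_ISO8601:timestamp} %{IP:src_ip} %{IP:dst_ip} %{WORD:action}" }
    }
    # Use the timestamp from the log line as the event time.
    date {
      match => ["timestamp", "ISO8601"]
      target => "@timestamp"
    }
    # Tag the event with its origin and drop the now-redundant field.
    mutate {
      add_field => { "site" => "onprem-dc1" }   # hypothetical site label
      remove_field => ["timestamp"]
    }
  }
}
```

Events that do not match the pattern are tagged `_grokparsefailure` by default, which makes it possible to route them separately rather than lose them.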

The on-premises log aggregators can be deployed on the following cloud and private virtualization platforms:

• Amazon Web Services (AWS)

• Microsoft Azure

• Microsoft Hyper-V

• VMware ESXi

The log aggregators can be deployed either on a virtual machine (VM) or in a container.

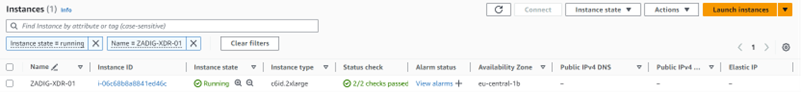

Below is an example of deploying the log aggregator on a virtual machine running on Amazon Web Services (AWS):

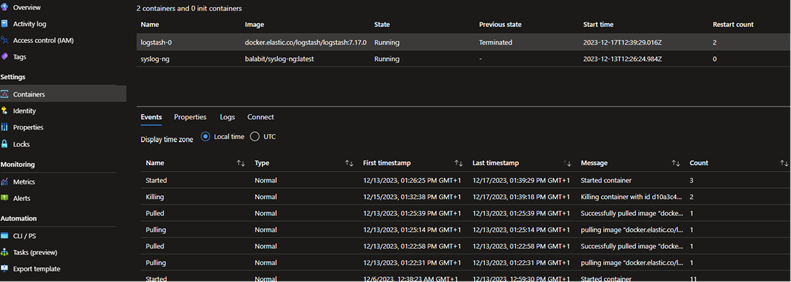

Below is an example of deploying the log aggregators on containers in Microsoft Azure:

Data retention policies

ZADIG XDR natively stores data on Elasticsearch or equivalent cloud storage. The system allows the creation of a data lifecycle policy to activate a specific retention policy, fully customizable based on the needs of the specific organization using the solution.

Data is stored within the repository, divided by indices, and a specific retention policy can be set for each index.

For example, generic data (not related to cybersecurity incidents) is stored in indices created ad hoc, optionally categorized, with a chosen retention policy (e.g., at least 30 days). Other data, such as data related to cybersecurity incidents, is stored in different indices, to which a longer retention period can be applied (e.g., at least 180 days).
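One way to realize per-index retention with an Elasticsearch repository is to route events to different rollover aliases, each bound to its own index lifecycle management (ILM) policy. The sketch below assumes that incident-related events carry a hypothetical `incident` tag and that two ILM policies named `generic-30d` and `incident-180d` have already been created in Elasticsearch. The host, alias names, and policy names are placeholders.

```
output {
  if "incident" in [tags] {
    # Incident-related data: longer retention (hypothetical 180-day policy).
    elasticsearch {
      hosts => ["https://elasticsearch.example.internal:9200"]  # hypothetical host
      ilm_enabled => true
      ilm_rollover_alias => "xdr-incidents"                     # hypothetical alias
      ilm_policy => "incident-180d"                             # must already exist
    }
  } else {
    # Generic data: shorter retention (hypothetical 30-day policy).
    elasticsearch {
      hosts => ["https://elasticsearch.example.internal:9200"]  # hypothetical host
      ilm_enabled => true
      ilm_rollover_alias => "xdr-generic"                       # hypothetical alias
      ilm_policy => "generic-30d"                               # must already exist
    }
  }
}
```

When ILM is enabled, the plugin writes through the rollover alias rather than a fixed `index` name, so the retention period itself is defined in the policy on the Elasticsearch side and not in the pipeline.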

In order to retain data (even only certain specific types) for an unlimited time, it is necessary to set the retention policy accordingly.

Our XDR solution divides data retention into hot and cold states and, for repository types that support it, adds a warm state.

The categorization is managed as follows:

• Hot: The index is continuously updated and frequently queried;

• Warm: The index is no longer updated but is still queried;

• Cold: The index is neither updated nor queried.

The expansion of storage space, if and when needed, is carried out transparently without causing service interruptions.

Confidentiality of data and communications

Data held in the cloud repository is managed with full respect for privacy. It is stored in the monitored organization's cloud space and encrypted both in transit and at rest with strong encryption algorithms (AES-256 at minimum). Access to the data is managed granularly and granted only to entities that need it for the solution to function.

Communications between the components of the solution are fully encrypted with TLS; the minimum version used for data in transit is TLS v1.2. Each input plugin is configured to accept encrypted communications; where a plugin does not support TLS, a dedicated TLS proxy handles secure data reception from outside. Similarly, our XDR solution transmits data to the repository over TLS.
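For a TLS-capable input, the minimum protocol version can be sketched as follows using the Logstash `tcp` input. This assumes a plugin version that provides the `ssl_supported_protocols` option alongside the `ssl_enable`, `ssl_cert`, and `ssl_key` names used elsewhere in this document; newer releases rename some of these options. The port, certificate paths, and plugin ID are hypothetical.

```
input {
  tcp {
    id => "tls-min-version-example"   # hypothetical plugin ID
    port => 6514                      # hypothetical port
    mode => "server"
    ssl_enable => true
    ssl_cert => "/usr/share/logstash/config/example-server.crt"  # hypothetical path
    ssl_key => "/usr/share/logstash/config/example-server.key"   # hypothetical path
    # Accept only TLS v1.2 and later; older protocol versions are refused.
    ssl_supported_protocols => ["TLSv1.2", "TLSv1.3"]
  }
}
```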

Each component integrated into our XDR solution provides APIs for automation.